When to use the microservice architecture: part 1 - the need to deliver software rapidly, frequently, and reliably


Updated October 2023

This is part 1 in a series of blog posts about why and when to use the microservice architecture. Other posts in the series are:

- Part 2 - the need for sustainable development

- Part 3 - two thirds of the success triangle - process and organization

- Part 4 - the final third of the success triangle - architecture

- Part 5 - the monolithic architecture

Introduction

The microservice architecture is an architectural style that structures an application as a collection of services that are

- Highly maintainable and testable

- Loosely coupled

- Independently deployable

- Organized around business capabilities

- Owned by a small team

It’s important to remember that the microservice architecture is not a silver bullet. It should never be the default choice. However, as I wrote recently, neither should the monolithic architecture.

Instead, both architectures are patterns that every architect should have in their toolbox.

Whether or not you should use the microservice architecture for a given application can be summed up as ‘it depends’. A longer and perhaps more helpful guideline is:

IF

you are developing a large/complex application

AND

you need to deliver it rapidly, frequently and reliably

over a long period of time

THEN

the Microservice Architecture is often a good choice

This is the first in a series of articles that expand on that guideline and attempt to explain when you should consider using the microservice architecture. It describes why IT must deliver software rapidly, frequently and reliably (DSRFR). Later articles explain how DSRFR requires your architecture to have certain properties, which in turn informs the choice between the monolithic and microservice architectures.

Let’s first look at why IT must deliver software rapidly, frequently and reliably.

Two trends requiring IT to deliver software rapidly, frequently and reliably

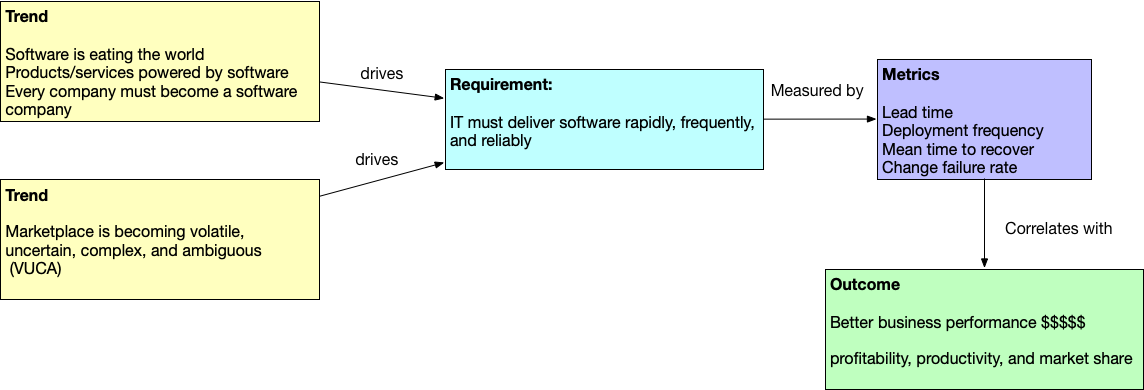

There are two trends that are changing how IT delivers software (and driving the adoption of microservices).

Trend #1 - software is eating the world

The first trend is, as Marc Andreessen said back in 2011, that software is eating the world. In other words, every company, regardless of its industry, needs to become a software technology company. It doesn’t matter whether you’re in banking, insurance, mining, or aviation: your products and services are all powered by software.

October 2023 update:

This article was written in February 2020, just before the COVID-19 pandemic really impacted the world. In May 2020, I wrote an article about the impact of COVID-19 on online grocery delivery companies. Since then, there have been other major world events, including wars: yet more clear illustrations of how unpredictable the world is.

Trend #2 - the marketplace is becoming increasingly volatile, uncertain, complex and ambiguous

The second trend that’s driving the adoption of microservices is that the marketplace within which companies operate is becoming increasingly volatile, uncertain, complex, and ambiguous (a.k.a. VUCA). For example, traditional brick-and-mortar banks in Singapore and Hong Kong have, or will soon have, multiple new competitors: virtual banks. And, in the UK, insurance companies must now compete against Insurtech startups that offer innovative new products. Zego, for example, provides hourly commercial insurance for gig economy workers.

Businesses need to be more nimble and agile => IT needs to accelerate software delivery

Because of these two trends, businesses today must be much more nimble, much more agile, and they must innovate at a much faster rate. And, since these innovative products and services are powered by software, IT must deliver software much more rapidly, much more frequently, and much more reliably.

Quantifying rapidly, frequently and reliably

One of my favorite books about high performance IT organizations is Accelerate by Nicole Forsgren, Jez Humble and Gene Kim. It introduces four metrics that the authors have found to be a good way to quantify rapid, frequent, and reliable delivery of software. The first two metrics measure velocity:

- lead time - the time from the developer committing a change to that change going into production

- deployment frequency - how often changes are pushed into production, which you can measure in terms of deploys per developer per day

Both these metrics are very much based on Lean principles. Reducing lead time is important because it results in faster feedback. Also, a shorter lead time means that a bug in production can be fixed much faster.

Accelerate uses deployment frequency as a proxy for batch size, which is another important Lean concept. Reducing batch size has numerous benefits, including faster feedback, reduced risk, and increased motivation.
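To make the two velocity metrics concrete, here is a minimal Python sketch that computes them from deployment records. The `Change` record, its fields, the helper functions, and the sample timestamps are hypothetical assumptions for illustration; in practice this data would come from your version control and deployment tooling. Reporting the median lead time rather than the mean is also an assumption, chosen so that one unusually slow change doesn't dominate the result.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Change:
    """Hypothetical record of a single change (assumed fields)."""
    committed_at: datetime  # when the developer committed the change
    deployed_at: datetime   # when the change went into production


def lead_times(changes: list[Change]) -> list[timedelta]:
    """Lead time of each change: from commit to running in production."""
    return [c.deployed_at - c.committed_at for c in changes]


def deploys_per_developer_per_day(deploy_count: int, developers: int, days: int) -> float:
    """Deployment frequency, normalized by team size and the length of the period."""
    return deploy_count / (developers * days)


# Hypothetical sample data for one team.
changes = [
    Change(datetime(2023, 10, 2, 9, 0), datetime(2023, 10, 2, 9, 40)),    # 40 minutes
    Change(datetime(2023, 10, 2, 11, 0), datetime(2023, 10, 2, 11, 25)),  # 25 minutes
    Change(datetime(2023, 10, 3, 14, 0), datetime(2023, 10, 3, 15, 10)),  # 70 minutes
]

print("median lead time:", median(lead_times(changes)))  # prints 0:40:00
# 30 deploys by 5 developers over 3 days
print("deploys/dev/day:", deploys_per_developer_per_day(deploy_count=30, developers=5, days=3))  # prints 2.0
```

Normalizing by developers and days lets you compare teams of different sizes, which is why the metric is expressed per developer per day rather than as a raw count.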

The second two metrics measure the reliability of software delivery:

- mean time to recover from a deployment failure

- change failure rate - what percentage of deployments result in an outage or some other problem?

Obviously, we want to minimize these two metrics. We must reduce the likelihood of a change causing a production outage. But if it does, we must be able to quickly detect, diagnose, and recover from that failure.
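The two reliability metrics can be sketched the same way. This is a minimal illustration, not a definitive implementation: the `Deployment` record, its fields, and the sample data are hypothetical, and it assumes a failure starts at deployment time, whereas real incident tooling may track failure start and detection times separately.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Deployment:
    """Hypothetical record of a single production deployment (assumed fields)."""
    deployed_at: datetime
    failed: bool                             # did it cause an outage or some other problem?
    recovered_at: Optional[datetime] = None  # when service was restored, if it failed


def change_failure_rate(deployments: list[Deployment]) -> float:
    """Percentage of deployments that resulted in a failure."""
    if not deployments:
        return 0.0
    failures = sum(1 for d in deployments if d.failed)
    return 100.0 * failures / len(deployments)


def mean_time_to_recover(deployments: list[Deployment]) -> Optional[timedelta]:
    """Average time from a failed deployment to recovery (None if nothing recovered)."""
    # Assumption: the failure begins at deployment time.
    durations = [
        d.recovered_at - d.deployed_at
        for d in deployments
        if d.failed and d.recovered_at is not None
    ]
    if not durations:
        return None
    return sum(durations, timedelta()) / len(durations)


# Hypothetical sample data: 5 deployments, 2 of which failed.
deployments = [
    Deployment(datetime(2023, 10, 2, 9, 0), False),
    Deployment(datetime(2023, 10, 2, 12, 0), True, datetime(2023, 10, 2, 12, 20)),  # 20 min
    Deployment(datetime(2023, 10, 3, 10, 0), False),
    Deployment(datetime(2023, 10, 3, 16, 0), True, datetime(2023, 10, 3, 16, 40)),  # 40 min
    Deployment(datetime(2023, 10, 4, 11, 0), False),
]

print(f"change failure rate: {change_failure_rate(deployments)}%")  # prints 40.0%
print("MTTR:", mean_time_to_recover(deployments))                 # prints 0:30:00
```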

How rapid? How frequent? How reliable?

The following diagram shows the results of the 2017 State of DevOps Report. The State of DevOps Report is published every year by the Accelerate authors. It surveys a large number of organizations and asks them about their development practices, including the four metrics described earlier. The results are then analyzed, and organizations are clustered by those four metrics into low, medium, and high performers.

As you can see, high performing organizations deliver software at a much faster rate. Their deployment frequency is significantly higher: they deploy into production multiple times a day. Their lead time is also short: under an hour.

But what’s really interesting is that these organizations also deliver software much more reliably. Their mean time to recover is less than an hour, and their change failure rate is significantly lower. In other words, high performing organizations deliver software both rapidly and reliably. It’s common for high performance IT organizations at large companies to deploy hundreds or thousands of times per day. Apparently, Amazon deploys every 0.6 seconds!

Rapid, frequent and reliable delivery => better business outcomes

High performing IT organizations deliver software rapidly, frequently and reliably. What’s more, according to the research done by the Accelerate authors, high performing IT organizations are associated with better business performance, as measured by profitability, productivity, and market share. In other words, today, for a business to be successful, it needs to have mastered software development.

What’s next

In the next post, I’ll discuss another challenge for IT: the need for sustainable development.
